GStreamer H.264/MP4 decoding: C/C++ basics and encoding/decoding buffer manipulation

Exploring GStreamer and pipelines

Before turning to code, let’s look at what we can do without writing any. GStreamer ships with useful command-line utilities, in particular:

- gst-inspect-1.0 lists the available plugins and elements (codecs, demuxers, filters, sinks), so you can immediately see what is installed and pick the set of filters and codecs you need.

- gst-launch-1.0 builds and runs any pipeline from the command line. GStreamer processes media as a pipeline: the stream passes through a chain of elements in series, from a source to a sink. The source can be a file or a device, for example; the sink can be a file, the screen, or a network output using a protocol such as RTP.

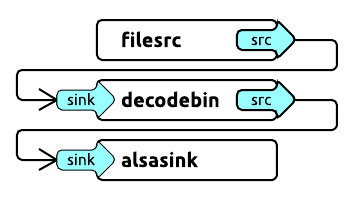

Simple example of using gst-launch-1.0 to connect elements and play audio:

gst-launch-1.0 filesrc location=/path/to/file.ogg ! decodebin ! alsasink

Here filesrc opens the file, decodebin decodes it, and alsasink plays the audio.

A more complex example that plays an MP4 file:

gst-launch-1.0 filesrc location=file.mp4 ! qtdemux ! h264parse ! avdec_h264 ! videoconvert ! autovideosink

filesrc reads the MP4 file, which then passes through the MP4 demuxer (qtdemux), the H.264 parser (h264parse), the decoder (avdec_h264), the format converter (videoconvert), and finally the video output (autovideosink).

You can replace autovideosink with filesink, setting its location parameter, to write the decoded stream directly to a file.

Programming an application with GStreamer C/C++ API. Let’s try to decode

Now that we know how to use gst-launch-1.0, we will do the same thing inside our application. The principle remains the same: we build a decoding pipeline, but now using the GStreamer library and GLib events.

We will consider a live example of H264 decoding.

The GStreamer library is initialized once, at application startup, with:

gst_init (NULL, NULL);